If you’re familiar with the term data warehouse, you’ll know that it’s a system for storing structured data for business intelligence and reporting purposes. However, as businesses have started to appreciate the value of unstructured data, such as images, videos, and voice recordings, a new type of framework called the data lake has emerged. While the data lake is a powerful and flexible infrastructure for storing unstructured data, it lacks certain critical features, such as transaction support and data quality enforcement, and their absence can lead to inconsistent data.

To address these issues, a hybrid architecture was needed that could store both structured and unstructured data. This led to the development of the data lakehouse, which unifies structured and unstructured data in a single repository. Organizations that work with unstructured data can benefit from having a single data repository instead of requiring both a warehouse and a lake architecture.

Data lakehouses allow structure and schema, like those used in a data warehouse, to be applied to the unstructured data that is typically stored in a data lake. This enables data users, such as data scientists, to access information more quickly and efficiently. Intelligent metadata layers can also be used to categorize and classify the data, enabling it to be cataloged and indexed like structured data.
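To make the idea of a metadata layer concrete, here is a minimal sketch in Python. It is not how any particular lakehouse product works: the `Column` and `MetadataCatalog` classes, the field names, and the file paths are all hypothetical, and an in-memory dictionary stands in for a real catalog. It only illustrates the principle of validating metadata against a schema before an unstructured file is indexed.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass(frozen=True)
class Column:
    """One field of the (hypothetical) schema applied to file metadata."""
    name: str
    type: type
    required: bool = True


@dataclass
class MetadataCatalog:
    """Hypothetical in-memory metadata layer over unstructured files."""
    schema: List[Column]
    entries: Dict[str, Dict[str, Any]] = field(default_factory=dict)

    def register(self, path: str, metadata: Dict[str, Any]) -> None:
        """Validate metadata against the schema, then index the file by path."""
        known = {col.name for col in self.schema}
        unknown = set(metadata) - known
        if unknown:
            raise ValueError(f"{path}: unknown fields {sorted(unknown)}")
        for col in self.schema:
            if col.name not in metadata:
                if col.required:
                    raise ValueError(f"{path}: missing required field '{col.name}'")
                continue
            if not isinstance(metadata[col.name], col.type):
                raise TypeError(f"{path}: field '{col.name}' must be {col.type.__name__}")
        self.entries[path] = dict(metadata)

    def find(self, **criteria: Any) -> List[str]:
        """Return the paths whose metadata matches every given field value."""
        return sorted(
            path
            for path, meta in self.entries.items()
            if all(meta.get(key) == value for key, value in criteria.items())
        )


# Illustrative schema and files (all names are assumptions).
catalog = MetadataCatalog(
    schema=[
        Column("media_type", str),
        Column("label", str),
        Column("duration_s", float, required=False),
    ]
)
catalog.register(
    "raw/calls/0001.wav",
    {"media_type": "audio", "label": "complaint", "duration_s": 12.5},
)
catalog.register("raw/scans/0001.png", {"media_type": "image", "label": "invoice"})

audio_files = catalog.find(media_type="audio")
```

Once registered, the audio recording and the scanned image can be looked up by field value, much like rows in a table, while a file whose metadata does not fit the schema is rejected at write time.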

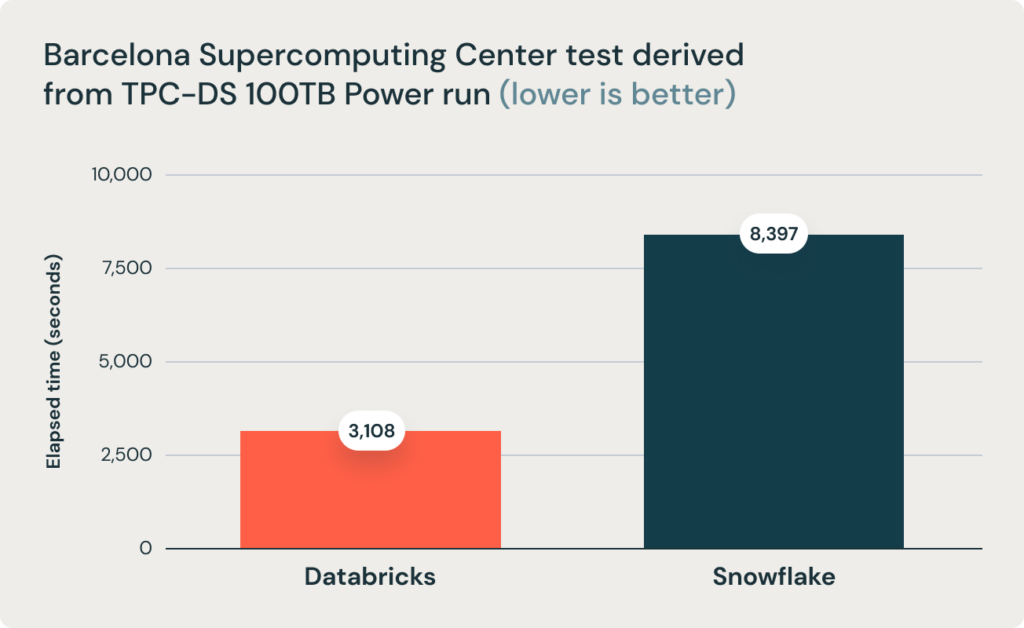

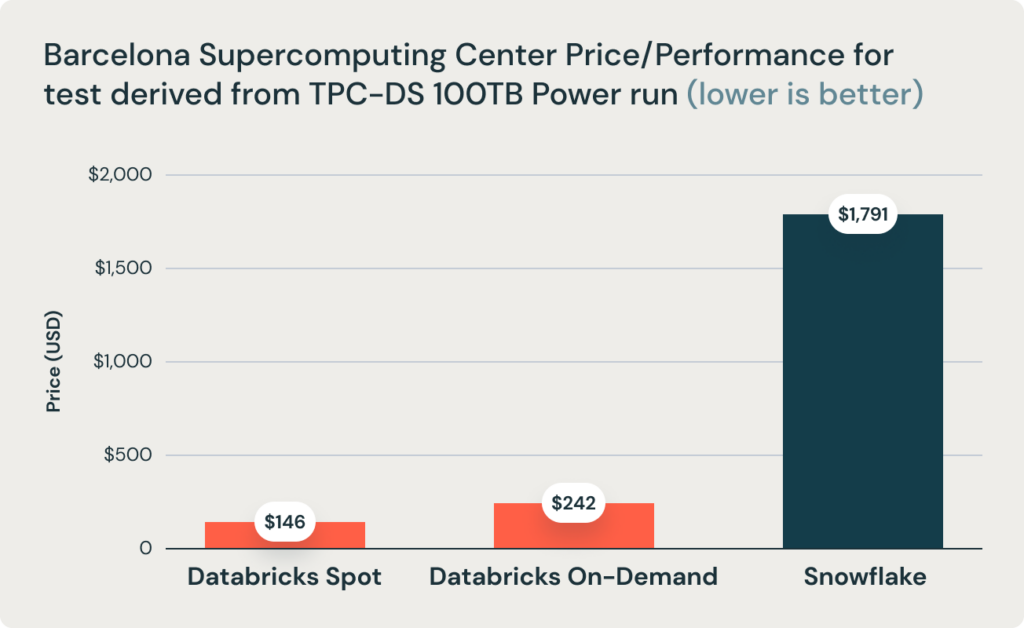

Data lakehouses are particularly well suited to organizations that want to move from business intelligence (BI) to artificial intelligence (AI) in their data-driven operations and decision-making. They are generally cheaper to scale than data warehouses, and they can be queried from anywhere with a wide range of tools, rather than only with applications built for structured data, such as SQL-based reporting tools.
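As a rough illustration of one repository serving both BI and AI workloads, the sketch below uses Python's built-in `sqlite3` module as a stand-in for a lakehouse query engine. This is an assumption made purely to keep the example runnable, and the table name, columns, and file paths are hypothetical. The same catalog of unstructured files answers a reporting-style aggregate and produces a list of labeled files that a machine learning job could load.

```python
import sqlite3

# In-memory SQLite database standing in for a lakehouse catalog (assumption).
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE media_catalog (
        path       TEXT PRIMARY KEY,
        media_type TEXT NOT NULL,
        label      TEXT NOT NULL,
        size_bytes INTEGER NOT NULL
    )
    """
)

# Hypothetical unstructured files described by structured metadata.
rows = [
    ("raw/calls/0001.wav", "audio", "complaint", 480_000),
    ("raw/calls/0002.wav", "audio", "inquiry", 320_000),
    ("raw/scans/0001.png", "image", "invoice", 150_000),
]
conn.executemany("INSERT INTO media_catalog VALUES (?, ?, ?, ?)", rows)
conn.commit()

# BI-style use: an aggregate report over the catalog.
bi_report = conn.execute(
    """
    SELECT media_type, COUNT(*), SUM(size_bytes)
    FROM media_catalog
    GROUP BY media_type
    ORDER BY media_type
    """
).fetchall()

# AI-style use: (path, label) pairs that a training job could read from storage.
training_set = [
    (path, label)
    for path, label in conn.execute(
        "SELECT path, label FROM media_catalog "
        "WHERE media_type = 'audio' ORDER BY path"
    )
]
```

In this toy setup, the report and the training list both come from the same table, so there is no separate warehouse copy to keep in sync with the lake.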

As more organizations recognize the value of using unstructured data together with AI and machine learning, data lakehouses are becoming increasingly popular. They represent a step up in maturity from the combined data lake and data warehouse model, and they will likely become simpler, more cost-efficient, and more capable of serving diverse data applications over time.